Introduction

Bank mergers reshape industry landscapes, expand market reach, and unlock new service capabilities. However, when two banks combine, the battle is often won or lost in the data. Data migration during bank mergers remains one of the most complex and risk-laden efforts in financial operations. The value of the merger itself depends on how well a bank handles banking data migration, integrates systems, maintains compliance, and delivers continuity to customers.

In 2026, global mergers and acquisitions activity is expected to remain strong as dealmakers build on the rebound in 2025 and anticipate sustained momentum across sectors. Financial institutions are increasingly focused on technology and data consolidation to support growth and resilience. Data migration in such deals is no longer an afterthought; it is a core strategic effort that determines whether the merged entity operates effectively or falters under legacy burdens.

This blog explores the hard reality of bank merger data challenges, shows why meticulous planning matters, and outlines how to execute M&A data migration in banking reliably.

Why Data Migration Is the Central Challenge in Bank Mergers

When a bank acquisition goes public, leadership, finance, and strategy teams dominate the conversation. Less visible, but equally critical, is the technical work of migrating data. Banks manage millions of customer accounts, along with transaction histories, compliance logs, and risk records. Each dataset carries regulatory and operational weight.

The task is not simply moving files. It is about:

- Maintaining data integrity

- Protecting customer information

- Preventing operational disruption

- Ensuring regulatory compliance

A failed migration can translate to account errors, compliance penalties, or significant service downtime.

Industry research highlights that more than 60 percent of all financial merger migration projects face costly setbacks when data quality issues are discovered late in the process. This is especially true in core banking migration, where incompatible data models and legacy systems create obstacles that simple tools can’t resolve.

In addition to internal business risk, external pressures mount from regulators and customers. Banks cannot afford system failures that lead to public scrutiny.

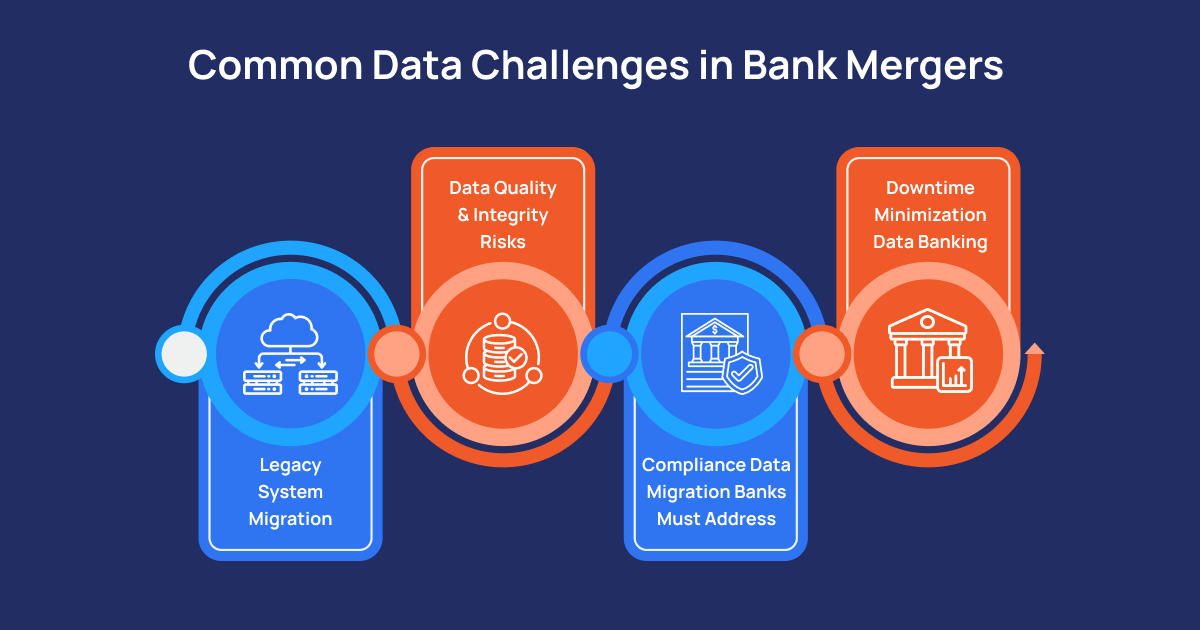

Common Data Challenges in Bank Mergers

1. Legacy System Migration

- Data schema mismatches

- Outdated data structures

- Lack of documentation

- Unsupported platforms
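One way to surface schema mismatches early is to diff the column definitions of the legacy and target systems before any data moves. The sketch below is illustrative only: the `Column` type, the `diff_schemas` helper, and both example schemas are hypothetical and not taken from any specific core banking platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Column:
    name: str
    dtype: str
    nullable: bool = True


def diff_schemas(source: list[Column], target: list[Column]) -> dict[str, list[str]]:
    """Compare two column lists by name and report the differences."""
    src = {c.name: c for c in source}
    tgt = {c.name: c for c in target}
    report = {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "missing_in_source": sorted(tgt.keys() - src.keys()),
        "type_mismatch": [],
        "nullability_mismatch": [],
    }
    for name in sorted(src.keys() & tgt.keys()):
        if src[name].dtype != tgt[name].dtype:
            report["type_mismatch"].append(
                f"{name}: {src[name].dtype} -> {tgt[name].dtype}"
            )
        # A nullable source column feeding a NOT NULL target column can reject rows.
        if src[name].nullable and not tgt[name].nullable:
            report["nullability_mismatch"].append(name)
    return report


# Hypothetical account tables from the acquired bank and the target platform.
legacy_accounts = [
    Column("acct_no", "CHAR(10)", nullable=False),
    Column("open_dt", "CHAR(8)"),
    Column("branch_cd", "CHAR(4)"),
]
target_accounts = [
    Column("acct_no", "VARCHAR(20)", nullable=False),
    Column("open_dt", "DATE", nullable=False),
    Column("customer_id", "BIGINT", nullable=False),
]

schema_report = diff_schemas(legacy_accounts, target_accounts)
for category, findings in schema_report.items():
    print(category, findings)
```

A report like this will not resolve undocumented business logic, but it gives the mapping team a concrete list to work through before the first test load.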

2. Data Quality and Integrity Risks

3. Compliance Requirements Banks Must Address

Every piece of banking data has regulatory implications. The migration process must preserve audit trails, maintain privacy classifications, and protect data in motion.

Financial regulators in the U.S., EU, and APAC now require detailed data lineage documentation and migration validation reports for all mergers. Failure to demonstrate comprehensive, secure data migration protocols can delay approval or draw penalties.

Maintaining compliance also intersects with customer protections. Each record must be preserved with full fidelity, ensuring no loss of transaction history or contractual information.
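To make the idea of a preserved audit trail more concrete, here is a minimal sketch of a tamper-evident lineage log, in which each entry stores the SHA-256 hash of the previous one. This is one possible technique, not a regulatory requirement; the function names, field names, and system names are assumptions made for illustration.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # sort_keys gives a stable serialization, so the same entry always hashes the same.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_lineage_entry(log: list[dict], entry: dict) -> dict:
    """Append an entry that is chained to the hash of the previous entry."""
    prev_hash = log[-1]["entry_hash"] if log else GENESIS_HASH
    body = {**entry, "prev_hash": prev_hash}
    record = {**body, "entry_hash": _digest(body)}
    log.append(record)
    return record


def verify_lineage_log(log: list[dict]) -> bool:
    """Return True only if every entry is unmodified and correctly chained."""
    prev_hash = GENESIS_HASH
    for record in log:
        body = {k: v for k, v in record.items() if k != "entry_hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != record["entry_hash"]:
            return False
        prev_hash = record["entry_hash"]
    return True


# Hypothetical lineage entries for two migrated records.
lineage_log: list[dict] = []
append_lineage_entry(lineage_log, {
    "source": "legacy_core.accounts", "target": "new_core.account",
    "record_key": "A1", "transform": "pad acct_no to 20 chars",
})
append_lineage_entry(lineage_log, {
    "source": "legacy_core.accounts", "target": "new_core.account",
    "record_key": "A2", "transform": "pad acct_no to 20 chars",
})

log_is_intact = verify_lineage_log(lineage_log)
print("lineage log intact:", log_is_intact)
```

Because each hash depends on the entry before it, editing or deleting an earlier entry causes verification to fail, which is the property an auditor would want from a migration log.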

4. Downtime Minimization in Banking

Bank customers expect continuous access to accounts, payments, and services. Even short outages can erode trust and increase operational costs.

Avoiding downtime during banking IT merger data migration is critical. Techniques such as phased migration, parallel runs, and incremental cut-overs help minimize service impact. However, these methods require detailed testing, rollback plans, and real-time monitoring.

Failing to minimize downtime not only creates customer service issues but can also trigger penalties in regions with strict uptime regulations.
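As a rough illustration of a phased migration with a rollback plan, the sketch below copies records in small batches, validates each batch, and undoes only the failing batch before halting. The in-memory `target_store`, the `batch_is_valid` rule, and the sample rows are all hypothetical stand-ins for real systems and real validation logic.

```python
def migrate_in_phases(source_rows, target, batch_size, validate):
    """Copy rows batch by batch; roll back the current batch and stop on failure.

    Assumes the target does not already contain the keys being migrated.
    """
    completed = 0
    for start in range(0, len(source_rows), batch_size):
        batch = source_rows[start:start + batch_size]
        written_keys = []
        for row in batch:
            target[row["id"]] = dict(row)
            written_keys.append(row["id"])
        if not validate(batch, target):
            for key in written_keys:  # undo this batch only
                del target[key]
            return {"status": "halted", "migrated": completed, "failed_batch_start": start}
        completed += len(batch)
    return {"status": "complete", "migrated": completed}


def batch_is_valid(batch, target):
    # Illustrative rule: every row arrived unchanged and carries a balance.
    return all(target.get(r["id"]) == r and r["balance"] is not None for r in batch)


rows = [
    {"id": 1, "balance": "10.00"},
    {"id": 2, "balance": "20.00"},
    {"id": 3, "balance": "30.00"},
    {"id": 4, "balance": None},  # deliberately bad record
    {"id": 5, "balance": "50.00"},
]
target_store = {}
result = migrate_in_phases(rows, target_store, batch_size=2, validate=batch_is_valid)
print(result, sorted(target_store))
```

Here the first batch lands, the second is rolled back because of the bad record, and the run stops before touching the rest, so the problem is contained rather than discovered after cut-over.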

Proven Strategies for Successful Bank M&A Data Integration

1. Comprehensive Early Data Assessment

2. Phased Migration and Parallel Operations

Moving all data in a single “big bang” rarely works. Phased migration lets teams test segments incrementally, validate results, and adjust plans before proceeding.

Parallel operations, where both old and new systems run concurrently for a period, offer an additional safety net. This approach ensures business continuity and gives compliance teams time to review outputs without pressure.

A controlled, phased approach aligns closely with the bank M&A data integration best practices recommended by both industry leaders and regulatory guidance.
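A parallel run can be pictured as feeding the same transactions to both systems and flagging the first point where their results differ. The following is a simplified sketch under that assumption; `legacy_post`, `candidate_post`, and the deliberately planted fee bug are invented for the example.

```python
from decimal import Decimal


def parallel_run(transactions, legacy_apply, new_apply, opening_balances):
    """Apply each transaction to both systems and record any balance divergence."""
    legacy = dict(opening_balances)
    new = dict(opening_balances)
    divergences = []
    for index, txn in enumerate(transactions):
        legacy_apply(legacy, txn)
        new_apply(new, txn)
        acct = txn["account"]
        if legacy[acct] != new[acct]:
            divergences.append((index, acct, legacy[acct], new[acct]))
    return divergences


def legacy_post(balances, txn):
    balances[txn["account"]] += txn["amount"]


def candidate_post(balances, txn):
    # Hypothetical defect in the new platform: fee postings are silently skipped.
    if txn["kind"] == "FEE":
        return
    balances[txn["account"]] += txn["amount"]


transactions = [
    {"account": "A1", "amount": Decimal("100.00"), "kind": "DEPOSIT"},
    {"account": "A1", "amount": Decimal("-2.50"), "kind": "FEE"},
    {"account": "A2", "amount": Decimal("40.00"), "kind": "DEPOSIT"},
]
opening = {"A1": Decimal("0.00"), "A2": Decimal("0.00")}

divergences = parallel_run(transactions, legacy_post, candidate_post, opening)
for index, acct, old, new in divergences:
    print(f"txn {index}: {acct} legacy={old} new={new}")
```

Using `Decimal` rather than floats keeps the comparison exact, which matters when a one-cent difference is itself the finding.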

3. Strong Governance and Ownership

- Data standards

- Roles and responsibilities

- Escalation paths

- Audit checkpoints

4. Modern Tools and Cloud Platforms

- Data validation pipelines

- Real-time monitoring

- Secure transfer protocols

- Scalability

Cloud-native tools also make it easier to maintain secure, documented environments that satisfy regulatory scrutiny during merger consolidation.
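A data validation pipeline does not have to be elaborate to be useful. The sketch below chains two simple checks, required fields and unique identifiers, over a batch of customer records. The check functions, field names, and sample data are assumptions for illustration, not the API of any particular migration tool.

```python
from collections import Counter


def check_required(records, fields):
    """Flag rows where a required field is absent or empty."""
    return [
        f"row {i}: missing {field}"
        for i, record in enumerate(records)
        for field in fields
        if record.get(field) in (None, "")
    ]


def check_unique(records, field):
    """Flag values of `field` that appear on more than one row."""
    counts = Counter(record.get(field) for record in records)
    return [
        f"duplicate {field}={value!r} ({n} rows)"
        for value, n in counts.items()
        if value is not None and n > 1
    ]


def run_pipeline(records, checks):
    """Run every check in order and collect all reported issues."""
    issues = []
    for check in checks:
        issues.extend(check(records))
    return issues


# Hypothetical customer extract from the acquired bank.
customers = [
    {"customer_id": "C1", "tax_id": "111"},
    {"customer_id": "C2", "tax_id": ""},
    {"customer_id": "C1", "tax_id": "333"},
]
checks = [
    lambda rs: check_required(rs, ["customer_id", "tax_id"]),
    lambda rs: check_unique(rs, "customer_id"),
]

issues = run_pipeline(customers, checks)
for issue in issues:
    print(issue)
```

Keeping each check as a small function makes it easy to add bank-specific rules later and to run the same pipeline before and after each migration phase.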

5. Post-Migration Reconciliation and Validation

- Verification of transactional history

- Reconciliation of balances

- Reporting checks

- Compliance validation

These efforts ensure the merged bank can operate from day one with confidence.
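Balance reconciliation, in its simplest form, compares per-account balances and control totals between the source and target systems. The sketch below assumes balances have already been extracted into dictionaries keyed by account number; `reconcile_balances` and the sample figures are hypothetical.

```python
from decimal import Decimal


def reconcile_balances(source, target):
    """Compare account balances between systems and summarize any breaks."""
    shared = sorted(source.keys() & target.keys())
    report = {
        "missing_in_target": sorted(source.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - source.keys()),
        "balance_breaks": {
            acct: (source[acct], target[acct])
            for acct in shared
            if source[acct] != target[acct]
        },
        # Control totals give a quick first signal before the per-account detail.
        "source_total": sum(source.values(), Decimal("0")),
        "target_total": sum(target.values(), Decimal("0")),
    }
    report["reconciled"] = not (
        report["missing_in_target"]
        or report["unexpected_in_target"]
        or report["balance_breaks"]
    )
    return report


source_balances = {
    "A1": Decimal("100.00"),
    "A2": Decimal("250.10"),
    "A3": Decimal("0.00"),
}
target_balances = {
    "A1": Decimal("100.00"),
    "A2": Decimal("250.01"),  # transposed digits
}

recon = reconcile_balances(source_balances, target_balances)
print(recon)
```

Note that matching control totals alone would not be enough: offsetting errors in two accounts can cancel out, which is why the per-account comparison is included.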

Emerging Trends in Banking Data Migration (2026)

- AI-Driven Validation: AI tools now help detect data anomalies during migration faster than traditional scripts.

- Automated Lineage Tracking: Automated tracking provides real-time lineage reports that satisfy regulators instantly.

- Secure Multi-Cloud Architectures: Banks are moving toward hybrid cloud solutions to balance performance, security, and compliance.

Conclusion

Data migration in bank mergers is not a checklist item. It is the backbone of operational success, customer trust, and compliance integrity. With a clear strategy, disciplined assessment, phased execution, and modern tooling, banks can confidently manage even the most complex M&A data migration initiatives. Detailed planning reduces risk, elevates execution confidence, and positions the merged bank for future growth.

Merging banks? Don’t let data migration become the point of failure.

PiTech helps financial institutions execute secure, compliant, and low-risk data migrations without downtime, data loss, or regulatory surprises.

Key Takeaways

- Data migration decides merger success or failure: In bank mergers, poor data integration creates more risk than financial or operational alignment issues.

- Legacy systems are the biggest hidden obstacle: Incompatible core banking platforms, undocumented logic, and outdated formats slow migrations and increase failure rates.

- Data quality issues surface late and cost the most: Duplicate records, missing histories, and inconsistent identifiers often appear after cut-over, driving rework and downtime.

- Compliance cannot be fixed after migration: Audit trails, lineage, and data security must be preserved throughout the migration to avoid regulatory delays or penalties.

- Downtime is a business risk, not just a technical one: Even brief outages erode customer trust and can trigger regulatory consequences.

- Phased, well-governed migrations reduce risk dramatically: Early data assessment, parallel runs, strong ownership, and modern cloud tooling lead to stable post-merger operations.

Frequently Asked Questions (FAQs)

What are the biggest data compatibility issues when merging two banks’ systems?

How do banks ensure data consistency across legacy and modern platforms during mergers?

What strategies prevent data loss in financial data migration projects?

Banks prevent data loss through full data audits, incremental backups, checksum validation, transaction logging, and parallel testing. These measures ensure every record is tracked and verified throughout the migration lifecycle.
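Checksum validation can be sketched as hashing each record in a canonical form on both sides and comparing the results. The example below uses SHA-256 from Python's standard library; the record layout and the `find_altered_records` helper are assumptions for illustration.

```python
import hashlib


def record_checksum(record: dict) -> str:
    """Hash a record's fields in sorted key order so field ordering cannot matter.

    Assumes field values never contain the '|' separator.
    """
    canonical = "|".join(f"{key}={record[key]}" for key in sorted(record))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_altered_records(source: dict, target: dict) -> list:
    """Return keys of source records that are missing or changed in the target."""
    return sorted(
        key
        for key in source
        if key not in target
        or record_checksum(source[key]) != record_checksum(target[key])
    )


# Hypothetical transaction records keyed by transaction ID.
source_records = {
    "T1": {"account": "A1", "amount": "100.00", "posted": "2025-01-03"},
    "T2": {"account": "A1", "amount": "15.25", "posted": "2025-01-04"},
    "T3": {"account": "A2", "amount": "40.00", "posted": "2025-01-04"},
}
target_records = {
    "T1": {"posted": "2025-01-03", "amount": "100.00", "account": "A1"},
    "T2": {"account": "A1", "amount": "15.52", "posted": "2025-01-04"},
}

altered = find_altered_records(source_records, target_records)
print("records needing review:", altered)
```

In practice the canonical form also has to normalize dates, number formats, and character encodings consistently on both systems, otherwise identical records will appear to differ.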

How do banks handle security and compliance when integrating data from acquired banks?

Security and compliance are maintained using encryption in transit and at rest, role-based access controls, detailed audit trails, and strict adherence to financial regulations. Clear data lineage and documentation support regulatory reviews.

What causes operational disruptions during bank merger data migrations?

Disruptions are typically caused by incomplete data mapping, legacy system conflicts, insufficient testing, late discovery of data quality issues, and weak governance during execution.