
Introduction

Why Generative AI Poses Unique Risks for Banks

Practical GenAI Use Cases That Work in Banking

Despite the risks, GenAI use cases in banking are delivering measurable value when they are implemented with proper controls. In customer service, banks use generative AI tools to summarise interactions, assist agents in real time, and personalise banking experiences without exposing sensitive data.
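A common way to keep sensitive data out of a model is to mask identifiers before a transcript is sent for summarisation. The sketch below is minimal and rests on assumptions. The regex patterns and the `redact` helper are hypothetical and far from exhaustive. Production systems usually rely on dedicated PII-detection tooling instead.

```python
import re

# Hypothetical patterns for illustration only; real PII detection needs far broader coverage.
# Order matters: card numbers are masked before the shorter account-number pattern runs.
PII_PATTERNS = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
    ("CARD", re.compile(r"\b(?:\d[ -]?){15}\d\b")),   # 16 digits, optional spaces or dashes
    ("ACCOUNT", re.compile(r"\b\d{8,12}\b")),          # assumed 8-12 digit account numbers
]


def redact(text: str) -> str:
    """Replace each PII match with a typed placeholder before the text reaches a model."""
    for label, pattern in PII_PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


transcript = "Customer jane.doe@example.com asked about card 4111 1111 1111 1111 on account 12345678."
print(redact(transcript))
# → Customer [EMAIL] asked about card [CARD] on account [ACCOUNT].
```

The typed placeholders keep the summary readable for agents ("the customer asked about a card") while the raw identifiers stay in the system of record.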

In fraud detection, GenAI models analyse transaction patterns alongside traditional rule-based systems to flag anomalies faster. This strengthens fraud detection while retaining human oversight and proven controls. Credit risk teams use GenAI to support credit scoring, not replace it. The models analyse unstructured data such as financial statements and customer communications, which improves accuracy while reducing manual effort.
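The "alongside, not instead of" pattern can be sketched as a router. Existing rules and a model's anomaly score each contribute reasons, and anything flagged goes to a human analyst rather than being blocked automatically. This is a hypothetical illustration. The rule set, the `REVIEW_THRESHOLD` value, and the assumption that `anomaly_score` comes from a separate model are all invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    amount: float
    country: str
    home_country: str
    merchant_category: str


# Hypothetical rule set standing in for a bank's existing rule engine.
HIGH_RISK_MCC = {"gambling", "crypto_exchange"}
AMOUNT_LIMIT = 5_000.0
REVIEW_THRESHOLD = 0.7  # assumed anomaly-score cutoff; tuned on historical data in practice


def rule_hits(tx: Transaction) -> list[str]:
    """Apply the traditional, deterministic rules."""
    hits = []
    if tx.amount > AMOUNT_LIMIT:
        hits.append("amount_over_limit")
    if tx.country != tx.home_country:
        hits.append("foreign_transaction")
    if tx.merchant_category in HIGH_RISK_MCC:
        hits.append("high_risk_merchant")
    return hits


def route(tx: Transaction, anomaly_score: float) -> tuple[str, list[str]]:
    """Combine rule hits with a model score; any flag sends the case to human review."""
    reasons = rule_hits(tx)
    if anomaly_score >= REVIEW_THRESHOLD:
        reasons.append(f"model_score={anomaly_score:.2f}")
    decision = "human_review" if reasons else "approve"
    return decision, reasons


tx = Transaction(amount=120.0, country="FR", home_country="GB", merchant_category="grocery")
print(route(tx, anomaly_score=0.82))
# → ('human_review', ['foreign_transaction', 'model_score=0.82'])
```

Returning the list of reasons, not just a verdict, gives the reviewing analyst a traceable explanation of why the case was raised.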

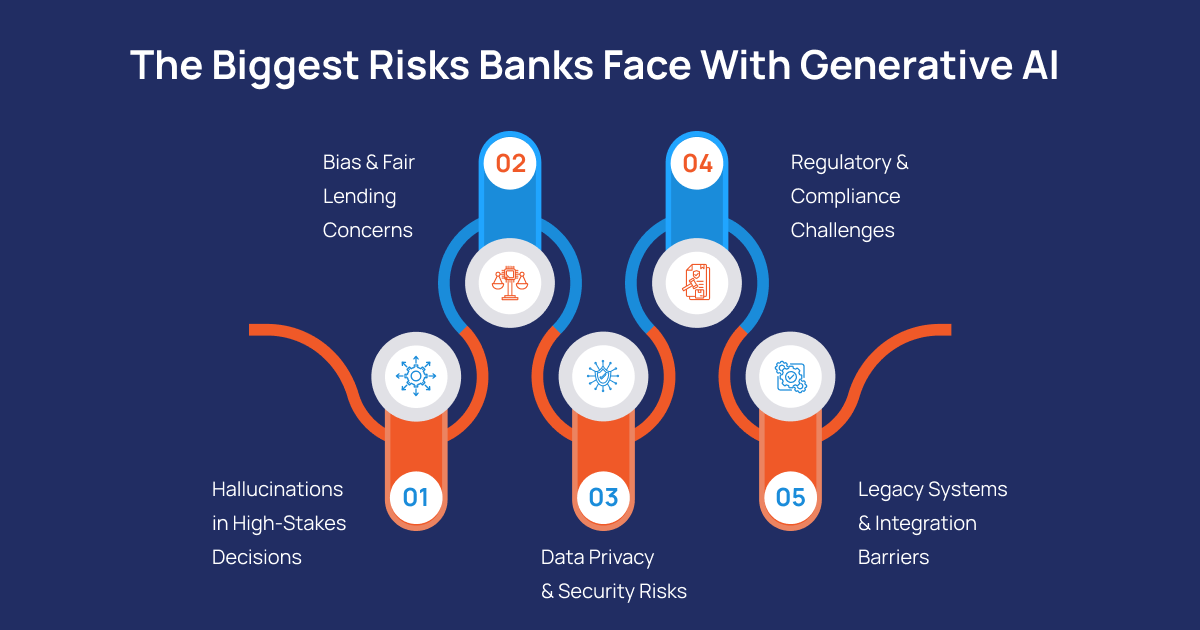

The Biggest Risks Banks Face With Generative AI

Hallucinations in High-Stakes Decisions

Bias and Fair Lending Concerns

Data Privacy and Security Risks

Regulatory and Compliance Challenges

Legacy Systems and Integration Barriers

Many banks still operate on legacy core banking platforms. Integrating GenAI with these systems remains complex, especially when real-time processing, auditability, and security controls are required without disrupting existing operations.
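One way to integrate without disrupting operations is a thin adapter in front of the existing call. The adapter sends a small, deterministic share of customers to the GenAI path, falls back to the legacy path on any failure, and writes an audit entry for every request. The sketch below is hypothetical. `ROLLOUT_PERCENT`, the in-memory `AUDIT_LOG`, and both summary functions are placeholders, not a real core-banking API.

```python
import hashlib
from datetime import datetime, timezone

ROLLOUT_PERCENT = 10  # hypothetical share of customers routed to the GenAI path
AUDIT_LOG: list[dict] = []  # stand-in for an append-only audit store


def in_rollout(customer_id: str, percent: int = ROLLOUT_PERCENT) -> bool:
    """Hash the customer into a 0-99 bucket so the same customer always takes the same path."""
    bucket = int(hashlib.sha256(customer_id.encode()).hexdigest(), 16) % 100
    return bucket < percent


def legacy_summary(customer_id: str) -> str:
    return f"standard summary for {customer_id}"  # placeholder for the existing core-banking call


def genai_summary(customer_id: str) -> str:
    return f"GenAI draft summary for {customer_id}"  # placeholder for a model call


def get_summary(customer_id: str) -> str:
    path = "genai" if in_rollout(customer_id) else "legacy"
    if path == "genai":
        try:
            result = genai_summary(customer_id)
        except Exception:
            # Any GenAI failure degrades to the existing path instead of breaking the request.
            path, result = "legacy_fallback", legacy_summary(customer_id)
    else:
        result = legacy_summary(customer_id)
    AUDIT_LOG.append({
        "ts": datetime.now(timezone.utc).isoformat(),
        "customer_id": customer_id,
        "path": path,
    })
    return result
```

Raising `ROLLOUT_PERCENT` in small steps, while the audit log shows which path served each request, is what makes the integration gradual rather than a cut-over.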

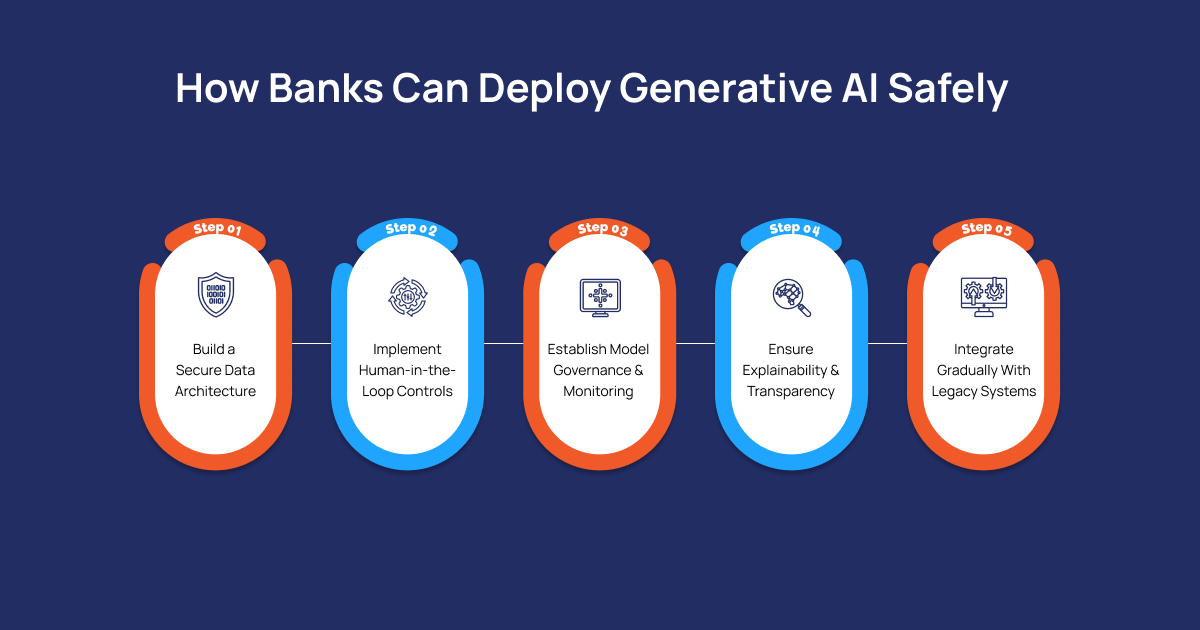

How Banks Can Deploy Generative AI Safely

Build a Secure Data Architecture

Implement Human-in-the-Loop Controls

Establish Model Governance and Monitoring

Ensure Explainability and Transparency

Integrate Gradually With Legacy Systems

Measuring ROI Without Compromising Compliance

Return on investment is a key concern for banking leaders. GenAI delivers ROI when it reduces operational costs, improves decision accuracy, and enhances customer experience without increasing risk exposure. Metrics should include efficiency gains, reduced fraud losses, improved resolution times, and fewer compliance incidents. Importantly, ROI should be assessed alongside risk mitigation outcomes, not in isolation.
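As a worked illustration of assessing ROI "alongside risk mitigation outcomes", the sketch below computes simple ROI as (benefits − cost) / cost and reports it next to the change in compliance incidents. All figures are invented for the example. The `acceptable` rule is an assumed policy, not an industry standard.

```python
from dataclasses import dataclass


@dataclass
class QuarterMetrics:
    # Hypothetical inputs; monetary figures in one currency.
    efficiency_savings: float    # e.g. analyst hours saved x loaded hourly cost
    fraud_losses_avoided: float  # reduction in fraud losses vs. a baseline period
    programme_cost: float        # licences, infrastructure, staff, governance
    compliance_incidents: int    # incidents in the GenAI period
    baseline_incidents: int      # incidents in a comparable pre-GenAI period


def roi(m: QuarterMetrics) -> float:
    """Simple ROI = (benefits - cost) / cost."""
    benefits = m.efficiency_savings + m.fraud_losses_avoided
    return (benefits - m.programme_cost) / m.programme_cost


def scorecard(m: QuarterMetrics) -> dict:
    """Report ROI next to the risk outcome rather than in isolation."""
    return {
        "roi": round(roi(m), 2),
        "compliance_incidents_change": m.compliance_incidents - m.baseline_incidents,
        # Assumed policy: positive ROI only counts if incidents did not rise.
        "acceptable": roi(m) > 0 and m.compliance_incidents <= m.baseline_incidents,
    }


q = QuarterMetrics(efficiency_savings=300_000, fraud_losses_avoided=150_000,
                   programme_cost=250_000, compliance_incidents=2, baseline_incidents=3)
print(scorecard(q))
# → {'roi': 0.8, 'compliance_incidents_change': -1, 'acceptable': True}
```

Here benefits of 450,000 against a cost of 250,000 give an ROI of 0.8 (80%). The result is only marked acceptable because incidents fell at the same time.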

Moving From Experimentation to Responsible Adoption

Conclusion

Generative AI has the potential to transform banking, but only when deployed with discipline and accountability. Hallucinations, bias, and compliance risks are not side issues. They are central challenges that determine whether AI-driven transformation in banking delivers value or creates exposure. Banks that treat generative AI as a controlled capability rather than an experiment will be better positioned to scale responsibly. By investing in secure architectures, human oversight, explainability, and governance, financial institutions can adopt GenAI with confidence while maintaining trust, regulatory alignment, and long-term resilience.

If hallucinations, regulatory risk, or legacy system constraints are slowing your GenAI initiatives, PiTech can help. Our banking-focused GenAI frameworks prioritise security, explainability, and compliance from day one.

Key Takeaways

- Generative AI brings real value to banking, but the risks are higher than in most industries: Hallucinations, bias, and weak governance can quickly turn innovation into regulatory exposure.

- GenAI should support decisions, not make them alone: Human-in-the-loop controls are essential for lending, fraud, and compliance use cases.

- Hallucinations and bias are business risks, not technical glitches: In banking, even a confident but wrong AI output can damage trust and trigger compliance issues.

- Secure data architecture and explainability are non-negotiable: Banks must always know where data comes from, how it’s used, and why a GenAI output was generated.

- Legacy systems don’t block GenAI, but rushed integration does: Gradual, API-led integration allows innovation without disrupting core banking operations.

- ROI only matters if compliance and trust are protected: Successful GenAI adoption balances efficiency gains with strong governance, transparency, and regulatory alignment.
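The human-in-the-loop and provenance points above can be combined in a single release gate. No GenAI output is acted on without recorded sources and a rationale, and outputs for lending, fraud, or compliance also need a named human approver. This is a hypothetical sketch. The `GenAIOutput` fields and the `release` function are illustrative names, not an existing framework.

```python
from dataclasses import dataclass
from typing import Optional

HIGH_STAKES = {"lending", "fraud", "compliance"}  # use cases named in the takeaways


@dataclass
class GenAIOutput:
    use_case: str
    text: str
    sources: list[str]   # where the input data came from
    model_version: str   # which model produced the output
    rationale: str       # why the output was generated
    approved_by: Optional[str] = None


def release(output: GenAIOutput) -> str:
    """Refuse to act on an output until provenance exists and, if high-stakes, a human approved it."""
    if not output.sources or not output.rationale:
        raise ValueError("missing provenance: sources and rationale are required")
    if output.use_case in HIGH_STAKES and output.approved_by is None:
        raise PermissionError(f"{output.use_case} output requires human approval")
    return output.text


draft = GenAIOutput(use_case="lending", text="Recommend a 36-month term",
                    sources=["bureau_report"], model_version="model-v3",
                    rationale="stable income, low existing debt")
try:
    release(draft)
except PermissionError as err:
    print(err)  # lending output requires human approval
draft.approved_by = "credit.officer"
print(release(draft))  # Recommend a 36-month term
```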

Frequently Asked Questions (FAQs)

How can banks use GenAI for personalized loan recommendations without bias?

Banks can use GenAI to assist with loan recommendations by combining structured financial data with contextual insights, while keeping humans in the decision loop. Bias can be reduced by training models on diverse datasets, applying fairness testing, and reviewing GenAI outputs rather than auto-approving them. This approach lets banks personalise recommendations without violating fair lending regulations.
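One simple fairness test compares approval rates across groups on a held-out set. The sketch below computes each group's approval rate relative to the best-treated group and flags ratios under 0.8. That threshold is the "four-fifths" heuristic from US employment-selection guidelines, which is often borrowed as a screening check. It is an assumption here, not a legal test for fair lending. The sample data is invented.

```python
from collections import defaultdict

FOUR_FIFTHS = 0.8  # screening heuristic, not a legal safe harbour


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """decisions: (group_label, approved) pairs from a held-out evaluation set."""
    totals, approvals = defaultdict(int), defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {g: approvals[g] / totals[g] for g in totals}


def adverse_impact(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Ratio of each group's approval rate to the highest group's rate."""
    rates = approval_rates(decisions)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no approvals in sample; ratio undefined")
    return {g: r / best for g, r in rates.items()}


# Invented sample: group A approved 60/100, group B approved 42/100.
sample = [("A", True)] * 60 + [("A", False)] * 40 + [("B", True)] * 42 + [("B", False)] * 58
ratios = adverse_impact(sample)
flagged = [g for g, r in ratios.items() if r < FOUR_FIFTHS]
print(flagged)  # ['B'], since 0.42 / 0.60 = 0.70
```

A flag is a trigger for investigation and human review of the model and its inputs, not proof of unlawful bias on its own.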

What are real-world examples of GenAI chatbots in retail banking?

In retail banking, GenAI chatbots are commonly used to summarise customer interactions, assist service agents, and answer routine queries such as account details or transaction status. These chatbots do not replace human agents but improve response speed and consistency, making them one of the most mature generative AI banking use cases today.
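A common safeguard for routine queries is to answer only an allow-listed set of intents from system-of-record data, and to hand everything else to a human agent. In the sketch below, the intent label is assumed to come from an upstream GenAI classifier. The intent names, templates, and account fields are hypothetical.

```python
from typing import Callable

# Hypothetical allowlist: each routine intent maps to a template filled from core-system data,
# so balances and statuses in the reply are never free-generated by the model.
ROUTINE_REPLIES: dict[str, Callable[[dict], str]] = {
    "balance": lambda acct: f"Your available balance is {acct['balance']:.2f} {acct['currency']}.",
    "transaction_status": lambda acct: f"Your last payment is {acct['last_payment_status']}.",
}


def handle(intent: str, account: dict) -> dict:
    """Answer allow-listed routine intents; escalate anything else to a human agent."""
    template = ROUTINE_REPLIES.get(intent)
    if template is None:
        return {"handled_by": "agent", "reply": "Connecting you to a member of our team."}
    return {"handled_by": "bot", "reply": template(account)}


acct = {"balance": 1520.5, "currency": "GBP", "last_payment_status": "settled"}
print(handle("balance", acct)["reply"])              # Your available balance is 1520.50 GBP.
print(handle("dispute_charge", acct)["handled_by"])  # agent
```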

How does GenAI improve fraud detection in real-time transactions?

GenAI enhances fraud detection by analysing transaction behaviour patterns alongside traditional rule-based systems. It can identify subtle anomalies and evolving fraud tactics in near real time. When combined with alerts and human review, GenAI-assisted fraud systems can improve detection accuracy while keeping false positives under control.
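The near-real-time mechanic can be illustrated with a per-account baseline that updates one transaction at a time. The sketch below uses Welford's online mean and variance with a z-score alert. This is a classical statistical stand-in, not a generative model. It only shows how scoring and human-review alerts can happen per transaction. `Z_ALERT`, `MIN_HISTORY`, and the sample amounts are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Welford's online mean/variance, updated one transaction at a time."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std(self) -> float:
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


Z_ALERT = 3.0    # hypothetical alert threshold
MIN_HISTORY = 5  # do not score until the account has a baseline


def score(stats: RunningStats, amount: float) -> bool:
    """Return True if the amount should raise an alert for human review."""
    sd = stats.std()
    alert = stats.n >= MIN_HISTORY and sd > 0 and abs(amount - stats.mean) / sd > Z_ALERT
    if not alert:
        stats.update(amount)  # alerted amounts stay out of the baseline until a reviewer clears them
    return alert


baseline = RunningStats()
history = [42.0, 38.5, 51.0, 45.2, 40.1, 47.3]
alerts = [score(baseline, amt) for amt in history + [980.0]]
print(alerts)  # only the final 980.0 payment raises an alert
```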

Can GenAI automate compliance reporting for financial regulations?

GenAI can assist with compliance reporting by summarising regulatory documents, generating draft reports, and mapping controls to regulations. However, compliance teams must always review final submissions. This controlled use supports the compliance requirements of financial institutions while maintaining accountability and auditability.
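The "draft, then human review, then submit" rule can be enforced as a small state machine with an audit trail. In this design, submission is impossible until a compliance reviewer has approved the draft. The sketch is hypothetical. The `Report` class, its statuses, and the actor names are illustrative, not part of any regulatory reporting system.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Status(Enum):
    DRAFT = "draft"          # produced by GenAI
    APPROVED = "approved"    # signed off by a compliance reviewer
    SUBMITTED = "submitted"


@dataclass
class Report:
    regulation: str
    body: str
    status: Status = Status.DRAFT
    audit: list[tuple[str, str, str]] = field(default_factory=list)  # (timestamp, actor, action)

    def __post_init__(self) -> None:
        self._log("genai-drafter", "drafted")

    def _log(self, actor: str, action: str) -> None:
        self.audit.append((datetime.now(timezone.utc).isoformat(), actor, action))

    def approve(self, reviewer: str) -> None:
        if self.status is not Status.DRAFT:
            raise RuntimeError("only drafts can be approved")
        self.status = Status.APPROVED
        self._log(reviewer, "approved")

    def submit(self, submitter: str) -> None:
        if self.status is not Status.APPROVED:
            raise RuntimeError("report must be approved by compliance before submission")
        self.status = Status.SUBMITTED
        self._log(submitter, "submitted")


report = Report(regulation="hypothetical-reg-001", body="Draft mapping of controls to requirements")
report.approve("compliance.lead")
report.submit("regulatory.ops")
print([action for _, _, action in report.audit])  # ['drafted', 'approved', 'submitted']
```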

What risks do hallucinations pose in banking AI models?

Hallucinations can cause GenAI models to generate incorrect or misleading outputs with high confidence. In banking, this creates risks in areas such as lending decisions, regulatory interpretation, and customer communication. Without validation and monitoring, hallucinations can lead to compliance breaches and loss of trust.
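One narrow but useful validation step catches figures in a generated answer that never appear in the source data the model was given. The sketch below checks only numbers. It cannot detect a wrong claim that reuses a correct figure. The regex, the `ungrounded_figures` name, and the sample texts are assumptions for illustration.

```python
import re

# Integers with optional thousands separators and optional decimals, e.g. 36, 54,000, 3.5
NUMBER = re.compile(r"\d+(?:,\d{3})*(?:\.\d+)?")


def ungrounded_figures(generated: str, source: str) -> list[str]:
    """Return numbers in the generated text that do not appear anywhere in the source."""
    def normalise(s: str) -> str:
        return s.replace(",", "")

    source_numbers = {normalise(n) for n in NUMBER.findall(source)}
    return [n for n in NUMBER.findall(generated) if normalise(n) not in source_numbers]


source = "Applicant income: 54,000 GBP. Existing debt: 12,500 GBP. Requested term: 36 months."
answer = "Income of 54,000 GBP against debt of 15,000 GBP supports a 36-month term."
print(ungrounded_figures(answer, source))  # ['15,000']
```

Any non-empty result would block the answer from reaching a customer or a decision-maker until someone checks it.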