

Introduction

Generative AI for banking is no longer just a futuristic buzzword; it is already reshaping the foundations of the modern banking sector. With AI, banks can improve efficiency, analyze risk, forecast market trends, detect fraud, and deliver personalized financial advice.

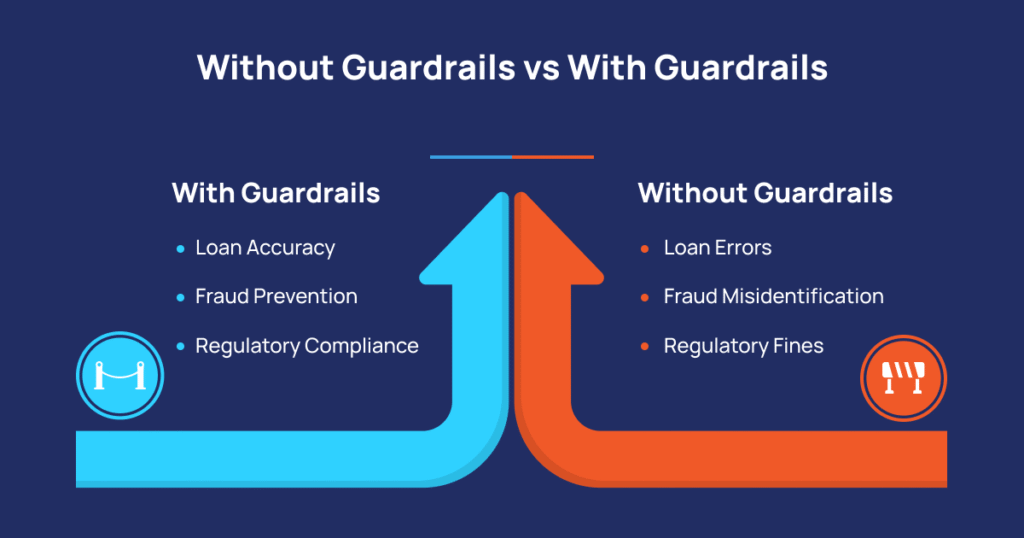

Yet deploying large language models (LLMs) in banking without proper safeguards can lead to errors, regulatory violations, and reputational damage. Financial institutions must implement LLM guardrails to ensure responsible AI deployment and maintain AI compliance in banking.

This article explores the five essential guardrails for LLM deployment, why these safeguards are crucial, and the risks of operating without them.

Why Do We Need Guardrails?

Risks Without Guardrails

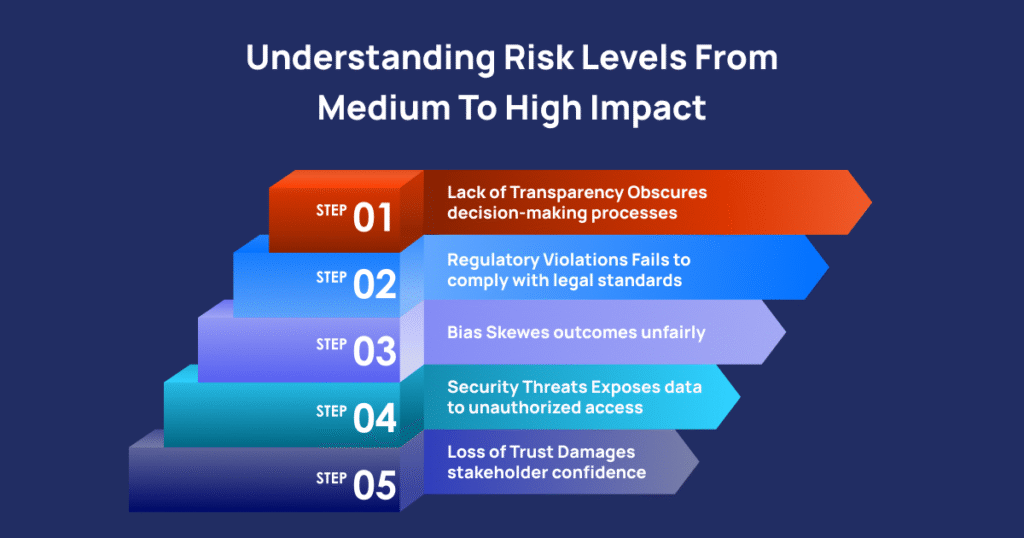

Without proper LLM guardrails, banks face multiple risks:

- Bias and Discrimination: LLMs trained on historical data may reproduce and amplify biases. This can lead to unfair loan denials, discriminatory risk assessments, and legal consequences.

- Lack of Transparency: AI systems often act as "black boxes." Customers and regulators may not understand how decisions are made, which undermines trust and accountability.

- Security Threats: Without robust safeguards, LLMs are vulnerable to hacking, prompt injection, and other adversarial attacks. Malicious actors could manipulate AI outputs to commit fraud or access sensitive data.

- Regulatory Violations: Banking is a heavily regulated sector. LLMs deployed without compliance controls may breach GDPR, anti-money laundering (AML) laws, or fair lending regulations, risking fines and reputational damage.

- Loss of Trust: Customers expect generative AI in financial services to be fair, accurate, and predictable. Unchecked errors or bias can erode confidence, increase customer churn, and harm the brand's reputation.

What is Generative AI in Banking?

Generative AI (Gen AI) is a branch of artificial intelligence (AI) that creates human-like content such as text and images; text-focused generative AI is powered by large language models (LLMs). In banking, generative AI can provide personalized financial advice, automate routine tasks, interpret complex financial data, simulate market scenarios, and help detect fraudulent transactions.

The 5 Essential Guardrails for LLM Deployment in Banking

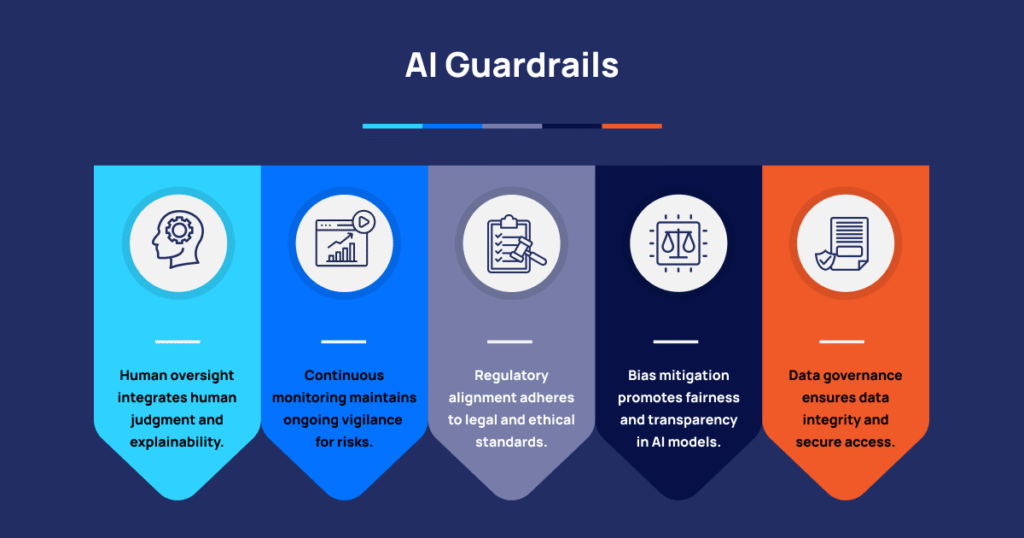

Implementing LLM guardrails helps ensure safe, compliant, and reliable AI use in banking. These five measures are critical for responsible AI deployment:

1. Data Governance and Access Controls

Strong data governance is essential for responsible LLM deployment. Sensitive financial data must be protected to prevent misuse and ensure AI compliance in banking.

Key measures include:

- Role-based access controls (RBAC) for AI systems.

- Encryption of sensitive customer and transactional data.

- Data lineage tracking to ensure accountability and auditability.
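To make the access-control and lineage points concrete, here is a minimal sketch, assuming a simple in-memory mapping from roles to the data categories each role may pass to an LLM. The role names, dataset names, and the `fetch_context_for_llm` helper are hypothetical; a real deployment would pull permissions from the bank's identity and access management system and write lineage records to durable, tamper-evident storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping (illustrative only).
ROLE_PERMISSIONS = {
    "support_agent": {"customer_profile"},
    "fraud_analyst": {"customer_profile", "transactions"},
}


@dataclass
class LineageLog:
    """Append-only record of which data was requested for the model, by whom, and when."""
    entries: list = field(default_factory=list)

    def record(self, user: str, role: str, dataset: str, allowed: bool) -> None:
        self.entries.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "dataset": dataset,
            "allowed": allowed,
        })


def fetch_context_for_llm(user: str, role: str, dataset: str, log: LineageLog) -> str:
    """Return data for a prompt only if the caller's role permits that dataset."""
    allowed = dataset in ROLE_PERMISSIONS.get(role, set())
    # Log every request, including denials, so audits see the full picture.
    log.record(user, role, dataset, allowed)
    if not allowed:
        raise PermissionError(f"role '{role}' may not send '{dataset}' to the LLM")
    # Placeholder: a real system would read from an encrypted data store here.
    return f"<{dataset} data>"
```

Checking permissions before data reaches the prompt, rather than filtering the model's output afterward, keeps sensitive records out of the model context entirely, and the lineage log doubles as an audit trail.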

2. Bias Mitigation and Model Transparency

Even advanced LLMs can produce biased outputs if trained on unbalanced datasets. In financial services, this may result in unfair credit decisions or discriminatory risk assessments.

Mitigation strategies:

- Use representative, diverse datasets for model training.

- Conduct regular bias detection and fairness audits.

- Implement explainable AI techniques for transparent decision-making.

Transparent LLM operations build trust with regulators, customers, and internal stakeholders.
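One common starting point for a fairness audit is the adverse impact ratio: each group's approval rate divided by a reference group's rate. The sketch below assumes decisions are available as `(group, approved)` pairs; the 0.8 threshold mirrors the widely cited "four-fifths" rule of thumb and is an assumption here, not a regulatory standard for every lending context. Real audits typically combine several metrics with legal review.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved: bool). Returns the approval rate per group."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {g: approvals[g] / totals[g] for g in totals}


def adverse_impact_ratios(decisions, reference_group):
    """Each group's approval rate divided by the reference group's approval rate."""
    rates = approval_rates(decisions)
    ref = rates[reference_group]
    if ref == 0:
        raise ValueError("reference group has no approvals; ratio is undefined")
    return {g: r / ref for g, r in rates.items()}


def flag_groups(decisions, reference_group, threshold=0.8):
    """Groups whose ratio falls below the threshold (0.8 follows the four-fifths rule of thumb)."""
    ratios = adverse_impact_ratios(decisions, reference_group)
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)
```

For example, with synthetic data where group A is approved 8 times out of 10 and group B 5 times out of 10, B's ratio is 0.5 / 0.8 = 0.625, so `flag_groups(decisions, "A")` returns `["B"]` for further investigation.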

3. Regulatory Alignment and AI Compliance Frameworks

The regulatory environment for AI in financial services is continuously evolving. Laws and guidelines such as the EU AI Act, OCC directives, and the NIST AI Risk Management Framework emphasize accountability, safety, and operational oversight.

Banks should:

- Integrate AI compliance into existing governance programs.

- Maintain comprehensive documentation of data sources, assumptions, and model performance.

- Conduct cross-functional audits to ensure adherence to industry standards.

This ensures responsible AI deployment and reduces legal or operational risks.
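Documentation is easier to audit when it is structured. The sketch below assumes a hypothetical per-model record covering the items listed above (data sources, assumptions, and performance) plus an approver field; the field names and the rule that every field must be non-empty before sign-off are illustrative assumptions, not a prescribed regulatory format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelRecord:
    """Hypothetical documentation record kept for each deployed model version."""
    model_name: str
    version: str
    data_sources: list[str] = field(default_factory=list)
    assumptions: list[str] = field(default_factory=list)
    performance: dict[str, float] = field(default_factory=dict)
    approved_by: str = ""

    def missing_fields(self) -> list[str]:
        """Names of fields that are still empty and would block audit sign-off."""
        return [name for name, value in asdict(self).items() if not value]

    def to_json(self) -> str:
        """Serialize the record so it can be versioned alongside the model."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)
```

An audit step can then refuse to promote a model while `missing_fields()` is non-empty, and the JSON output can be stored with each release for cross-functional review.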

4. Continuous Monitoring and Risk Management

LLM-based systems can drift or behave unexpectedly over time as data, usage patterns, and underlying models change. Continuous monitoring is essential to mitigate these risks:

- Real-time monitoring dashboards to detect anomalies or hallucinations.

- Feedback loops for retraining models with validated, accurate data.

- Risk management protocols for operational and security events.

Proactive monitoring ensures LLMs in banking remain secure, reliable, and accurate.
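A monitoring dashboard needs some rule for deciding when a metric looks anomalous. Below is a minimal sketch of one simple option, a rolling z-score, assuming a hypothetical hourly metric such as the share of answers flagged as unsupported by reviewers or an automated checker. The window size, threshold, and minimum history are illustrative defaults, not recommendations.

```python
from collections import deque
from statistics import mean, pstdev


class MetricMonitor:
    """Flags an observation that deviates sharply from a rolling baseline."""

    def __init__(self, window: int = 24, z_threshold: float = 3.0, min_history: int = 5):
        self.history = deque(maxlen=window)  # only the most recent `window` values are kept
        self.z_threshold = z_threshold
        self.min_history = min_history

    def observe(self, value: float) -> bool:
        """Record a value; return True if it is anomalous relative to recent history."""
        anomalous = False
        if len(self.history) >= self.min_history:
            baseline = list(self.history)
            mu = mean(baseline)
            sigma = pstdev(baseline)
            if sigma == 0:
                # A perfectly flat baseline: any change at all is unusual.
                anomalous = value != mu
            else:
                anomalous = abs(value - mu) / sigma > self.z_threshold
        self.history.append(value)
        return anomalous
```

This sketch adds anomalous values to the baseline as well; a production system might exclude them, or route them to the risk management protocols above before deciding whether they belong in the baseline.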

5. Human Oversight and Explainability

Despite advances in generative AI, human oversight remains critical. Human reviewers in the loop validate outputs, intervene in high-risk scenarios, and ensure accountability.

Best practices include:

- Review committees for AI decision validation.

- Explainable outputs for regulators, auditors, and customers.

- Override mechanisms for human operators to correct or halt AI decisions.

This ensures responsible AI deployment while maintaining trust and regulatory compliance.
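The review and override practices above can be sketched as a routing rule. This example assumes a hypothetical policy in which only low-risk approvals are finalized automatically, while every decline and every high-risk case goes to a human queue; the `Decision` fields, the 0.7 threshold, and the risk score itself are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional

REVIEW_THRESHOLD = 0.7  # assumption: risk scores at or above this always need a human


@dataclass
class Decision:
    application_id: str
    model_recommendation: str  # e.g. "approve" or "decline"
    risk_score: float          # hypothetical 0-1 score from an upstream model
    final_outcome: Optional[str] = None
    decided_by: Optional[str] = None


def route(decision: Decision, review_queue: list) -> Decision:
    """Auto-finalize low-risk approvals; send everything else to human review."""
    if decision.model_recommendation == "approve" and decision.risk_score < REVIEW_THRESHOLD:
        decision.final_outcome = "approve"
        decision.decided_by = "model"
    else:
        review_queue.append(decision)  # left undecided until a reviewer acts
    return decision


def human_override(decision: Decision, reviewer: str, outcome: str) -> Decision:
    """Record a reviewer's final call, which takes precedence over the model."""
    decision.final_outcome = outcome
    decision.decided_by = reviewer
    return decision
```

Recording `decided_by` on every decision makes it straightforward to report, for regulators or auditors, which outcomes were automated and which were made or changed by a person.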

How PiTech Enables Responsible AI in Banking

- Data security orchestration to protect sensitive financial information.

- Bias and compliance auditing modules to maintain fairness and transparency.

- Real-time monitoring dashboards to detect anomalies and ensure reliable outputs.

With PiTech, banks can leverage Generative AI in financial services safely, balancing efficiency, compliance, and trust.

Conclusion

Deploying LLMs in banking without guardrails risks errors, regulatory violations, and loss of trust. By implementing the five essential guardrails described above, banks can ensure responsible AI deployment and maintain AI compliance in banking.

With PiTech, financial institutions can confidently deploy LLMs, securing data, reducing risk, and optimizing customer experiences while staying fully compliant. Generative AI in financial services can then deliver innovation, speed, and reliability without compromising trust or regulatory adherence.

Key Takeaways

- Implement Guardrails: Deploying LLMs without safeguards risks errors, bias, and regulatory violations.

- Protect Data: Strong data governance and access controls ensure sensitive financial information remains secure.

- Ensure Fairness & Transparency: Bias mitigation and explainable AI build trust with customers and regulators.

- Stay Compliant: Align LLM deployment with frameworks and regulations like the EU AI Act, GDPR, and industry standards.

- Monitor & Manage Risks: Continuous monitoring, real-time feedback, and human oversight prevent inaccuracies and misuse.

Frequently Asked Questions (FAQs)

How can organizations ensure LLMs are compliant with regulatory requirements like the EU AI Act and HIPAA?

Organizations ensure LLM compliance by integrating AI compliance frameworks, maintaining documentation of data sources and outputs, conducting audits, and updating models to meet regulations like the EU AI Act and HIPAA.

What frameworks or guardrails are most effective for controlling LLM usage and preventing unauthorized actions?

How are risks like bias, model inaccuracies, and data leakage managed within AI governance platforms?

Risks are managed with bias detection, continuous model validation, data protection measures (encryption, anonymization, access controls), and real-time monitoring to ensure secure, accurate, and compliant LLM deployment.