Introduction

Generative AI for banking is no longer just a futuristic buzzword. It’s already redefining the foundations of the modern banking sector. Banks can now use AI to enhance efficiency, analyze risk, forecast market trends, detect fraud, and deliver personalized financial advice.

With customer expectations higher than ever and financial challenges growing more complex, banks have no choice but to adopt advanced AI technologies. This transformation enables faster, smarter decisions and more inclusive, tailored services.

In this blog, we’ll explore how banks can safely integrate generative AI, its transformative impact, recent developments, and practical applications. Discover how it’s reshaping customer experiences, streamlining workflows, and strengthening security and compliance.

Recent Developments in Generative AI for Banking

Banks and fintechs accelerated responsible AI adoption between 2024 and 2025, and the trend points toward even faster, smarter, and more responsible AI innovation across the industry. Notable developments include:

- JPMorgan Chase filed a patent for IndexGPT, an AI advisory system trained on market data and client profiles.

- IBM watsonx.ai introduced banking-specific LLMs with compliance controls for private deployment.

- PwC and Accenture published frameworks for Responsible AI in Financial Services, focusing on fairness, explainability, and accountability.

- Regulators like the UK’s FCA support sandbox testing for safe AI experimentation.

What is Generative AI in Banking?

Generative AI (Gen AI) is a branch of artificial intelligence (AI), typically powered by large language models (LLMs), that can generate human-like content (text, images, and more). In banking, it can provide personalized financial advice, automate routine tasks, interpret complex financial data, simulate market scenarios, and help detect fraudulent transactions.

Why Is Generative AI Becoming Crucial in Banking?

How Can Generative AI Be Safely Integrated into Financial Workflows?

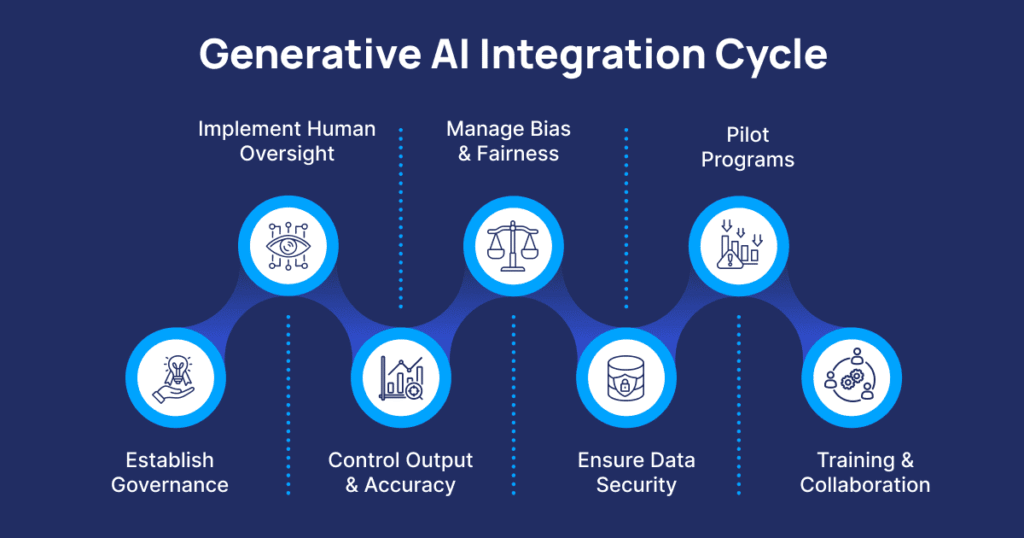

- Governance & Oversight: Establish a cross-functional AI governance team with legal, risk, compliance, and technology experts. Define clear policies, assign accountability, and classify AI workflows by risk. This team should monitor the full AI lifecycle, from design through deployment, to keep every workflow within regulatory requirements.

- Human-in-the-Loop: Ensure AI assists, but does not replace, humans in critical decisions. Deploy generative AI to draft reports, highlight risks, or rank applications, but a human reviewer must always validate and approve the outcomes.

- Controlled Output & Accuracy: Use structured prompts and templates to reduce hallucinations and enforce consistent output. Retrieval-Augmented Generation (RAG) can ground AI in verified internal knowledge or trusted external data, while explainable AI (XAI) ensures decisions are transparent and audit-ready.

- Bias & Fairness Management: Regularly audit AI models to detect bias and ensure fairness, retraining or patching as necessary. Explainable reasoning helps maintain customer trust and regulatory compliance.

- Data Security & Privacy: Deploy AI in secure private networks, anonymize sensitive information, and monitor access to prevent leaks or misuse.

- Pilot Programs & Incremental Deployment: Start with low-risk workflows, monitor outputs closely, and gradually expand to core banking processes.

- Training & Collaboration: Equip employees with AI literacy, foster collaboration between developers, compliance, and risk teams, and embed responsible practices from the start.
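Two of the practices above, anonymizing sensitive information and using structured prompt templates, are easy to picture in code. What follows is a minimal sketch, not a production design: the regex patterns, the template wording, and the function names `mask_pii` and `build_prompt` are all assumptions made for illustration, and real PII detection needs far broader coverage than two patterns.

```python
import re

# Hypothetical patterns for illustration only; real PII detection must also
# cover names, addresses, national IDs, and more.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "ACCOUNT": re.compile(r"\b\d{8,16}\b"),
}

# A fixed template keeps the instructions identical across requests.
PROMPT_TEMPLATE = (
    "You are drafting a note for a human reviewer.\n"
    "Use only the facts in CONTEXT. If a fact is missing, say so.\n"
    "CONTEXT:\n{context}\n"
    "TASK:\n{task}\n"
)


def mask_pii(text: str) -> str:
    """Replace each pattern match with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def build_prompt(context: str, task: str) -> str:
    """Mask the context first, then fill the fixed template."""
    return PROMPT_TEMPLATE.format(context=mask_pii(context), task=task)


prompt = build_prompt(
    "Customer jane.doe@example.com asked about account 1234567890.",
    "Summarize the request in one sentence.",
)
print(prompt)
```

Masking before the prompt is assembled means the raw identifiers never reach the model, which is the property a privacy review would typically look for first.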

What Are Promising Use Cases for Generative AI in Financial Forecasting or Fraud Detection?

Beyond banking chatbots, AI-driven virtual financial advisors, and conversational AI in loan underwriting, generative AI for banking excels in predictive insights, scenario modeling, and proactive defense against fraud.

Key use cases include:

Predictive Forecasting & Scenario Modeling

- Generative models ingest macroeconomic data, balance sheets, transaction volumes, lending cycles, and customer behavior to simulate forward paths, for example projecting credit losses under recessionary stress.

- Some industry analysts estimate that generative AI could add $200–$340B in value per year to banking by 2030, largely through forecasting, risk optimization, and operational gains.

- Banks are also experimenting with macroeconomic simulation models powered by generative AI that can craft stress scenarios not present in historical data.
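To show what comparing a baseline with a recessionary stress scenario looks like mechanically, here is a small sketch. It is a plain Monte Carlo simulation standing in for a generative model, and it uses the standard expected-loss identity (loss = exposure × probability of default × loss given default). The portfolio figures, the shock distribution, and the `pd_multiplier` parameter are assumptions chosen purely for illustration.

```python
import random
import statistics


def simulate_losses(ead, base_pd, lgd, pd_multiplier, n_paths=10_000, seed=7):
    """Return simulated portfolio losses for one scenario.

    Each path draws a random macro shock that scales the default
    probability, then computes loss = EAD * PD * LGD.
    """
    rng = random.Random(seed)
    losses = []
    for _ in range(n_paths):
        shock = rng.lognormvariate(0.0, 0.25)  # assumed shock distribution
        pd = min(1.0, base_pd * pd_multiplier * shock)  # cap probability at 1
        losses.append(ead * pd * lgd)
    return losses


def summarize(losses):
    """Report the mean loss and an approximate 99th-percentile loss."""
    ordered = sorted(losses)
    p99 = ordered[int(0.99 * len(ordered)) - 1]
    return {"mean": statistics.fmean(losses), "p99": p99}


# Hypothetical portfolio: 1,000,000 exposure, 2% baseline PD, 45% LGD.
baseline = summarize(simulate_losses(1_000_000, 0.02, 0.45, pd_multiplier=1.0))
recession = summarize(simulate_losses(1_000_000, 0.02, 0.45, pd_multiplier=2.5))
print("baseline:", baseline)
print("recession:", recession)
```

Because both runs share a seed, the only difference between them is the stress multiplier, which makes the scenario comparison easy to explain to a reviewer.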

Fraud & Anomaly Detection

- Generative frameworks enable synthetic fraud generation (to expand training sets) and real-time anomaly explanation. For example, Mastercard says its new generative AI model boosts fraud-detection rates by 20% on average, and by as much as 300% in some cases.

- Regulatory graph + GenAI systems can flag suspicious patterns and supply human-readable explanations aligned with compliance rules.

- Real-time transaction monitoring with hybrid graphs + LLMs is becoming operational in pilot settings for banking institutions.

- Document Automation & Insights: Use generative AI to auto-generate credit memos, risk reports, portfolio summaries, investment strategies, or regulatory filings. These reduce manual load and error.

- Application Modernization & Legacy Code Translation: Many banks still run legacy systems. Generative AI can migrate or refactor code, translate COBOL to newer languages, or modernize APIs, cutting IT operations costs.

- Conversational Finance: Generative models power richer conversational AI agents that deliver personalized advice, cross-sell offers, forecasting insights, or scenario analysis in dialogues.
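The idea of synthetic fraud generation mentioned under Fraud & Anomaly Detection can be illustrated with a deliberately simple stand-in. Instead of a generative model, this sketch perturbs known fraud records with small random noise to enlarge a training set. The feature names, the noise level, and the `jitter_samples` helper are hypothetical.

```python
import random


def jitter_samples(fraud_rows, n_new, noise=0.05, seed=11):
    """Create n_new synthetic rows by perturbing randomly chosen real ones.

    Each numeric feature is scaled by a factor in [1 - noise, 1 + noise].
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(fraud_rows)
        row = {k: v * rng.uniform(1 - noise, 1 + noise) for k, v in base.items()}
        # Keep provenance so audits can separate real from generated rows.
        row["synthetic"] = True
        synthetic.append(row)
    return synthetic


# Hypothetical confirmed-fraud records with two numeric features.
known_fraud = [
    {"amount": 4800.0, "tx_per_hour": 14.0},
    {"amount": 9900.0, "tx_per_hour": 3.0},
]
generated = jitter_samples(known_fraud, n_new=5)
augmented = known_fraud + generated
print(len(augmented), "rows,", len(generated), "synthetic")
```

Tagging every generated row matters more than the generation method itself: model validators need to be able to exclude synthetic data when measuring real-world performance.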

How to Address Regulatory and Compliance Concerns in Applying LLMs in Banking?

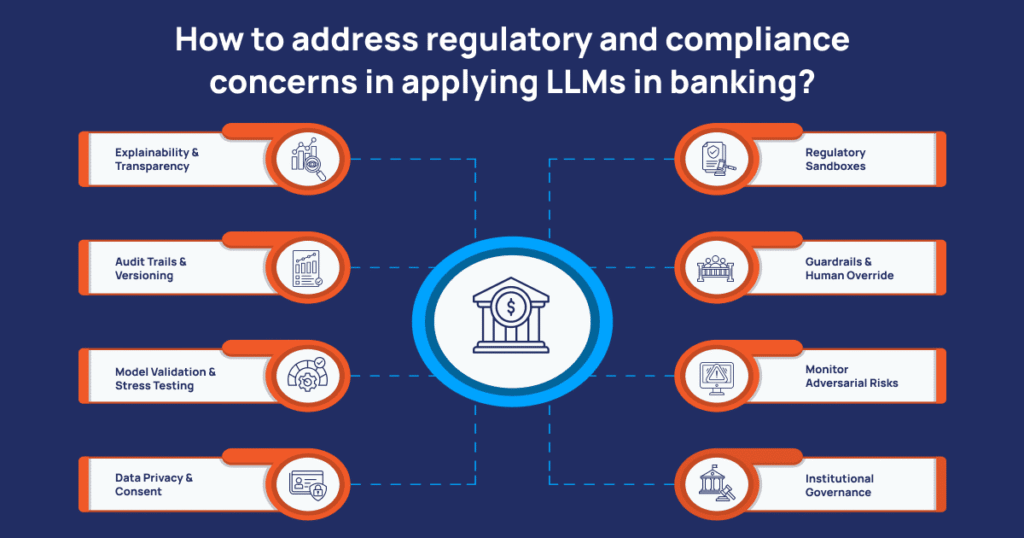

- Explainability & Transparency: Regulators often require that automated decisions (e.g., credit denial) be explainable. Use hybrid models (e.g., RAG, graphs) that can trace rationale and provide decision logic or supporting evidence.

- Audit Trails & Versioning: Log everything: prompts, knowledge sources, model version, and intermediate embeddings. Maintain immutable audit logs that compliance teams can review.

- Data Privacy, Consent & Masking: Train or fine-tune models using anonymized, synthetic, or consented customer data. Protect PII strongly. Use synthetic datasets or differential privacy when possible.

- Regulatory Sandboxes & Pilot Approval: Many regulators now support sandbox frameworks for new AI applications (e.g., UK FCA sandbox). Banks should submit proposals, monitor outcomes under oversight, and expand gradually.

- Guardrails, Safety Layers & Human Override: Incorporate deterministic guardrails (e.g., block transfers above threshold without manual confirmation) and always provide “fallback to human” on uncertain outputs.

- Monitor Adversarial / Deepfake Risks: Generative AI banking chatbots can themselves be abused. Financial regulators are issuing warnings about AI-powered scams (deepfake voice, synthetic identity fraud).

- Institutional Governance: Set up an AI ethics & governance board, model risk teams, and embed compliance into every AI project from conception to retirement.
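The audit-trail and guardrail points above lend themselves to a short sketch. The example below combines a deterministic rule (large transfers always go to a human) with a hash-chained log, where each entry stores the hash of the one before it so that later edits become detectable. This makes the log tamper-evident rather than truly immutable. The threshold value, the record fields, and the model identifier are assumptions for illustration.

```python
import hashlib
import json

TRANSFER_LIMIT = 10_000  # hypothetical threshold; a real one comes from bank policy


def requires_human(action: dict) -> bool:
    """Deterministic guardrail: large transfers always need manual confirmation."""
    return action.get("type") == "transfer" and action.get("amount", 0) > TRANSFER_LIMIT


class AuditLog:
    """Append-only log where each entry stores the hash of the previous one."""

    def __init__(self):
        self.entries = []

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        payload = json.dumps(record, sort_keys=True)
        entry_hash = hashlib.sha256((prev_hash + payload).encode()).hexdigest()
        self.entries.append({"prev": prev_hash, "payload": payload, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute every hash; any edited entry breaks the chain."""
        prev_hash = "0" * 64
        for entry in self.entries:
            expected = hashlib.sha256((prev_hash + entry["payload"]).encode()).hexdigest()
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
action = {"type": "transfer", "amount": 25_000}
log.append({
    "prompt": "Move funds to savings",
    "model_version": "demo-model-v1",  # hypothetical identifier
    "action": action,
    "routed_to_human": requires_human(action),
})
print("chain intact:", log.verify())
```

The guardrail sits outside the model on purpose: it is an ordinary rule that behaves the same way on every request, regardless of what the model generates.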

What Practical Experiments Can Banks Run with Generative AI in Regulatory Sandboxes?

Successful AI adoption comes from well-scoped experiments. Here are sandbox-friendly pilots banks can try:

- Document & Report Summarization: Test generative AI for banking to summarize regulatory filings, consent letters, risk reports, or credit memos. The financial risk is low, and the pilot is well suited to measuring accuracy, hallucination rates, and review cycles.

- Internal Knowledge Assistants: Deploy AI assistants for internal teams (compliance, HR, legal) to query policy documents, regulations, frameworks, or internal knowledge bases.

- Conversational Banking Assistants (Advisory Mode): Pilot AI-driven virtual financial advisors that suggest actions (e.g., budgeting, saving, product offers) without executing transactions. Collect feedback, measure trust, and compare outputs with human advisors.

- Scenario Generation / Forecasting Sandbox: Allow the model to generate macro or credit stress scenarios (AI in Financial Forecasting).

- Fraud Simulation & Synthetic Data Generation: Generate synthetic fraud samples to augment existing models (AI Fraud Detection).

- Compliance Explanation Engine: In sandbox mode, combine graph-based analysis with generative AI to flag suspicious activity and generate human-readable rationales tied to regulation sections.

- “Prompt-to-Report” for Analysts: Use generative interfaces for analysts: they write natural language prompts, get structured reports, charts, and insights. Some investment banks are already piloting this internally.
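For the summarization pilot, one rough way to put a number on hallucination rates is to check whether every figure in a summary also appears in its source document. The sketch below does only that. It is a crude proxy under stated assumptions, not a complete evaluation method, and the function names and sample texts are invented for illustration.

```python
import re

# Matches figures such as 4.2, 1,250, or 300.
NUMBER = re.compile(r"\d+(?:,\d{3})*(?:\.\d+)?")


def unsupported_numbers(source: str, summary: str) -> list[str]:
    """Return numeric figures that appear in the summary but not in the source."""
    source_numbers = set(NUMBER.findall(source))
    return [n for n in NUMBER.findall(summary) if n not in source_numbers]


def hallucination_rate(pairs) -> float:
    """Share of (source, summary) pairs with at least one unsupported figure."""
    flagged = sum(1 for src, summ in pairs if unsupported_numbers(src, summ))
    return flagged / len(pairs)


pairs = [
    ("Net exposure rose 4.2% to 1,250 accounts.", "Exposure grew 4.2% across 1,250 accounts."),
    ("Net exposure rose 4.2% to 1,250 accounts.", "Exposure grew 6.5% across 1,250 accounts."),
]
print(hallucination_rate(pairs))  # → 0.5
```

A check like this catches invented figures but not invented claims in prose, so it complements human review rather than replacing it.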

What Are Real Examples of Conversational AI Applications Improving Customer Experience in Banking?

Leading Examples Include:

- Bank of America's Erica: Provides personalized financial advice, money-saving tips, and helps with checking account balances and transaction history.

- Ally Assist: Available 24/7 on Ally Bank’s website and mobile app, assisting with deposits, account history, and other routine customer support tasks.

- Capital One's Eno: Monitors spending, checks bills, and helps pay for loans, mortgages, and other expenses.

- NatWest's Cora: Initially handled basic queries, now uses LLMs for complex tasks, including sensitive fraud claims, reducing handoffs to human agents.

- DBS Bank's digibot: Handles banking tasks via text or voice commands on the app or website, including checking balances and transferring funds.

- Goldman Sachs’ GS AI Assistant: Summarizes documents, drafts memos, and analyzes data for employees, improving internal efficiency.

- UBS’ AI Analyst Avatars: Creates AI-powered analyst clones to produce reports and video content, freeing analysts for higher-value tasks.

- Ryt Bank’s AI: LLM-native agentic framework enabling customers to perform core banking tasks through a single dialogue with built-in guardrails and audit-ready logging.

Future Outlook: Generative AI, Predictive Analytics, and Human Expertise

- Autonomous Advisors: Hybrid agents forecast risks and recommend actions in real-time.

- Cognitive Compliance Systems: AI drafts filings, audits decisions, and flags breaches autonomously.

- Hyper-Personalised Banking: Tailored portfolios, alerts, and budgeting advice generated dynamically.

Conclusion

Generative AI for banking can deliver:

- 24×7 AI assistants that reduce the load on support teams

- Faster, data-backed loan underwriting and fraud detection

- Personalized financial advice at scale

- Cost reduction and operational agility

- Compliance support and regulatory readiness

But the road to adoption must be cautious. Start with controlled experiments, embed human oversight, build observability, and always tie projects to business value.

Accelerate your banking transformation with PiTech. We deliver defense-grade security, ensure compliance, and provide scalable, measurable solutions to modernize your bank and stay future-ready.

Frequently Asked Questions (FAQs) on Generative AI for Banking

How can banks safely integrate generative AI without compromising compliance or data privacy?

What are the most practical use cases of generative AI in banking today?

Key use cases include: predictive financial forecasting, fraud detection, scenario modeling, document automation, legacy system modernization, and conversational AI for customer support and advisory services.